I didn't mean to spend so much time playing with the graphics... honest!!

This week featured a number of student presentations, and I presented my work from 1st semester with the amazing and descriptive title, "Parabolic function as iterated through colour and melodic transforms". Its sound component featured 12 simultaneous melodic lines using sine tones generated from an iterative function describing the shape of a parabola.

Here follows a somewhat lengthy discourse (for a blog) on sine tones and their use in this particular piece. The quintessential element has been paraphrased in the linked comment which you could peruse instead and save yourself possible minutes of life. Otherwise, you could continue reading ....

After my piece was played, there was a comment from David Dowling, something along the lines of "Did you think of putting some sort of modulation on? Sine waves get a bit old after a couple of minutes!". This has gotten me thinking ....

Why do I like sine tones? This has two aspects. (1) From a melodic aspect, I like their timbre (or lack of :), the way they actually sound when used in a melodic context. (2) From a harmonic aspect, they can be used in such a variety of ways with little concern for clashes of timbral colour - their lack of overtones makes them so easy to combine.

More broadly, when dealing with a wide range of frequencies/pitches, the choice of timbre becomes very important. Generally speaking, a timbre that sounds good at one pitch will not sound good 3 octaves above or 3 octaves below, so it needs a pitch-based modulation of the timbre to shape the sound as appropriate. Or, one could treat frequency along similar lines to John Delaney in his piece BinHex_25-06. There the timbres were distinctly delineated by frequency - not unlike an ensemble of actual instruments, whose own unique characteristics confine each to a possible frequency/pitch band. Hence one could create a number of timbres with attached frequency limits, and divide a broad frequency output among its appropriate voices.

Or, use a sine tone.

The harmonic aspect has particular importance when considered in the light of "computer music" (I use the term here to denote the style of music associated with both computer-aided composition and creation - an obvious example is using a mathematical equation to describe the entire melodic and harmonic output). I feel that in this respect, my use of a formula-derived methodology to replace the overt human aesthetics (e.g. pitch/rhythmic choices) requires simpler, purer tones to represent the audio output. Richer timbres create denser levels of complexity through the interaction of their extended harmonic components, and this is what I consider undesirable (although not strictly so - indeed these denser complexities have their own desirability). In my particular work, I wanted the purity of sine tones to help reveal the inner working of the mathematical process. Their interactions were intended as a particular mental abstraction overlaid onto what I conceived as a continually morphing spatial panorama, where overtones would only create muddy patterns.

That said, with the retrospective awareness of my piece and the cogitation/analysis engendered by its presentation, I feel I could extend it further:

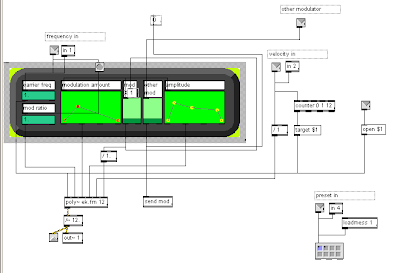

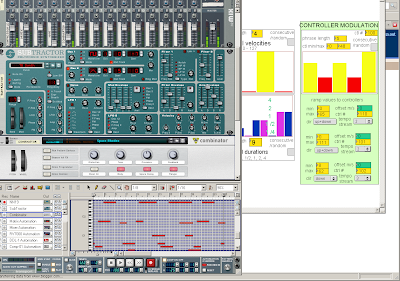

At present, the patch generates 12 independent tones. Each tone is given a duration, a constant amplitude and a pitch (the pitch is given a start and end value with a constant rate of change between them).
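For anyone who wants to poke at the idea outside the patch, here is a rough Python sketch of one such tone. It is not the patch itself: the function name `gliding_sine`, the 44100 Hz sample rate, and the reading of "constant rate of change" as a straight line in Hz (rather than in semitones) are all my assumptions for illustration.

```python
import math

SAMPLE_RATE = 44100  # assumed; the patch's actual rate is not stated


def gliding_sine(duration_s, amplitude, start_hz, end_hz, sample_rate=SAMPLE_RATE):
    """One sine tone: fixed duration, constant amplitude, linear glide in Hz.

    Hypothetical helper, not taken from the original patch.
    """
    n = int(round(duration_s * sample_rate))
    samples = []
    phase = 0.0
    for i in range(n):
        samples.append(amplitude * math.sin(phase))
        # Frequency moves from start_hz towards end_hz at a constant rate.
        freq = start_hz + (end_hz - start_hz) * i / n
        # Accumulate phase so the glide stays continuous (no clicks).
        phase += 2.0 * math.pi * freq / sample_rate
    return samples


def mix(voices):
    """Sum several voices sample by sample, scaled by the voice count."""
    length = max(len(v) for v in voices)
    out = [0.0] * length
    for voice in voices:
        for i, s in enumerate(voice):
            out[i] += s / len(voices)
    return out
```

Twelve calls to `gliding_sine` with different start and end values, passed through `mix`, would give a crude stand-in for the 12 lines. The start and end values themselves would come from the parabola iteration, which is not reproduced here.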

At the simplest level, the shape of these generated tones needs to be modified along the following lines: duration (the minimum possible could be shortened), amplitude (an envelope over time, rather than a constant amplitude), pitch (an envelope of changing pitch inclinations, rather than a constant rate of change).
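As a sketch of what that might look like, the snippet below swaps the constant amplitude and straight-line pitch for breakpoint envelopes. The piecewise-linear breakpoint format, the names `envelope` and `shaped_sine`, and the sample rate are assumptions of mine, not a description of how the patch would actually do it.

```python
import math


def envelope(breakpoints, t):
    """Piecewise-linear value at time t through sorted (time, value) pairs.

    Hypothetical helper; holds the first/last value outside the range.
    """
    if t <= breakpoints[0][0]:
        return breakpoints[0][1]
    for (t0, v0), (t1, v1) in zip(breakpoints, breakpoints[1:]):
        if t <= t1:
            return v0 + (v1 - v0) * (t - t0) / (t1 - t0)
    return breakpoints[-1][1]


def shaped_sine(duration_s, amp_env, pitch_env, sample_rate=44100):
    """Sine tone whose amplitude and frequency (Hz) both follow envelopes."""
    n = int(round(duration_s * sample_rate))
    samples = []
    phase = 0.0
    for i in range(n):
        t = i / sample_rate
        samples.append(envelope(amp_env, t) * math.sin(phase))
        # Phase accumulation keeps the tone continuous as the pitch bends.
        phase += 2.0 * math.pi * envelope(pitch_env, t) / sample_rate
    return samples


# Example: fade in and out over half a second while the pitch rises, then falls.
tone = shaped_sine(
    0.5,
    amp_env=[(0.0, 0.0), (0.1, 1.0), (0.5, 0.0)],
    pitch_env=[(0.0, 440.0), (0.25, 660.0), (0.5, 330.0)],
)
```

Breakpoint lists are only one option; any function of time could be dropped in for either envelope.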

The tones are also given a stereo value, where one of the stereo channels is delayed by a set amount. This could be modified so that the delay is also based on phase position, giving the frequency value more weight in the stereo placement.
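One possible reading of the phase idea, sketched in Python: instead of a fixed delay time, delay one channel by a fraction of the tone's own period, so the delay shrinks as the frequency rises. This interpretation, the function names and the sample rate are my assumptions here, not how the patch currently works.

```python
def delay_from_phase(freq_hz, phase_cycles, sample_rate=44100):
    """Delay in samples equal to a fraction of one cycle at freq_hz.

    phase_cycles = 0.25 means a quarter-cycle offset. Hypothetical helper.
    """
    return int(round(phase_cycles / freq_hz * sample_rate))


def to_stereo(mono, delay_samples):
    """Return (left, right), with the right channel delayed by delay_samples."""
    left = list(mono) + [0.0] * delay_samples   # pad so both channels match
    right = [0.0] * delay_samples + list(mono)  # silence first, then the tone
    return left, right
```

For a tone that glides in pitch, the delay would have to be recomputed as the frequency moves, which this sketch does not attempt.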

hmmmm, apart from that SINE TONES ROCK !!!!

or something :P

Various students. Student presentations. Level 5 Schulz building, Adelaide University. 11 September 2008